Paper ‘Give Me the Facts!’ at EMNLP’23

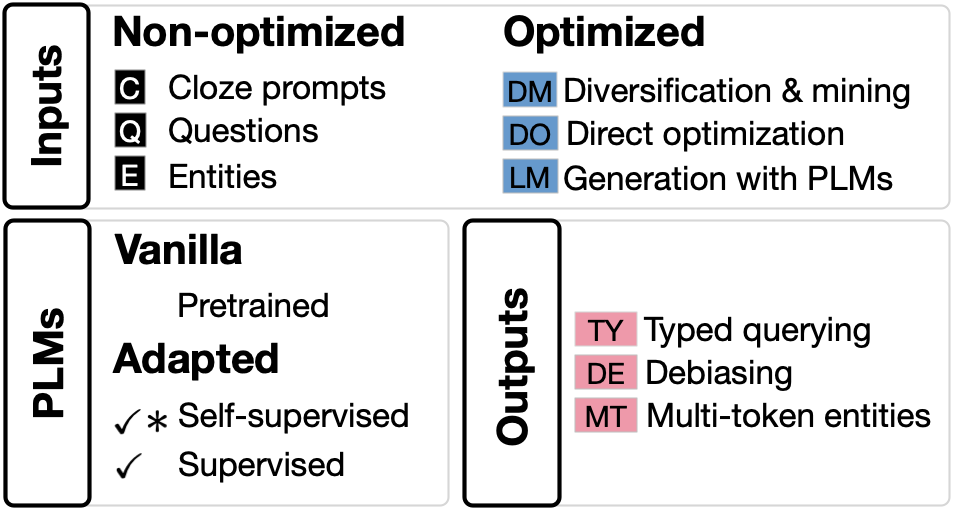

How do we know what language models know? And what are the obstacles to using them as knowledge bases? In our recent paper, presented at EMNLP 2023, we survey methods and datasets for probing factual knowledge in pre-trained language models (PLMs) and organize them along a categorization scheme.

We identify three main obstacles to using PLMs as knowledge bases:

- PLMs’ sensitivity to input queries results in inconsistent fact retrieval.

- Understanding where facts are stored and how they are retrieved is necessary for trustworthy applications.

- Reliable methods for updating knowledge and/or enhancing facts with time frames are still needed.
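
To make the first obstacle concrete, here is a minimal sketch of how sensitivity to query wording could be quantified: ask the same fact in several paraphrases and measure how often the answers agree. This is an illustration only, not the metric or code from the paper. The names `consistency`, `toy_model`, and `TOY_ANSWERS` are hypothetical, and the toy lookup table stands in for a real PLM.

```python
from collections import Counter
from typing import Callable, Sequence


def consistency(answer_fn: Callable[[str], str], paraphrases: Sequence[str]) -> float:
    """Share of paraphrased queries whose answer equals the most common answer."""
    if not paraphrases:
        raise ValueError("need at least one paraphrase")
    answers = [answer_fn(p) for p in paraphrases]
    _, top_count = Counter(answers).most_common(1)[0]
    return top_count / len(answers)


# Hypothetical stand-in for a PLM: the answer depends on the exact wording,
# which mimics the prompt sensitivity described above.
TOY_ANSWERS = {
    "Dante was born in [MASK].": "Florence",
    "The birthplace of Dante is [MASK].": "Florence",
    "Dante's place of birth is [MASK].": "Italy",
}


def toy_model(prompt: str) -> str:
    return TOY_ANSWERS.get(prompt, "unknown")


score = consistency(toy_model, list(TOY_ANSWERS))
print(f"consistency = {score:.2f}")
```

A score of 1.0 would mean every paraphrase yields the same answer; lower values indicate that the retrieved fact depends on how the query is phrased. In practice, `toy_model` would be replaced by a call to an actual model.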

Paper

- P. Youssef, O. Koraş, M. Li, J. Schlötterer, and C. Seifert, “Give Me the Facts! A Survey on Factual Knowledge Probing in Pre-trained Language Models,” in Findings of the Association for Computational Linguistics: EMNLP 2023, Singapore, Dec. 2023, pp. 15588–15605, doi: 10.18653/v1/2023.findings-emnlp.1043.